Strategies to accelerate rendering?

OpenSCAD only uses one core so it won't go above 12.5%. It does use

enormous amounts of RAM so if it causes swapping you will get less than one

core fully used.

The reason it is so slow is that CGAL uses infinite precision rationals to

represent numbers instead of floating point. As soon as you have curves or

rotation most coordinates become irrational, so the numbers explode, eating

up CPU and memory.
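
As a rough illustration of why exact rationals get expensive (a sketch only, not CGAL's actual code): the snippet below rotates a point repeatedly using Python's `fractions.Fraction`. The 3/5, 4/5 rotation is an assumption chosen so that the sine and cosine are themselves exact rationals. Even so, the denominator picks up another factor of 5 on every rotation, so each coordinate needs more bits after each step.

```python
from fractions import Fraction

# Assumed for illustration: a rotation whose cosine and sine are exact
# rationals (3/5 and 4/5), so no rounding happens anywhere.
C, S = Fraction(3, 5), Fraction(4, 5)

def rotate(x, y):
    """Rotate the point (x, y) about the origin using exact rational arithmetic."""
    return C * x - S * y, S * x + C * y

x, y = Fraction(1), Fraction(0)
sizes = []  # bit length of the x denominator after each rotation
for step in range(20):
    x, y = rotate(x, y)
    sizes.append(x.denominator.bit_length())

print(sizes)          # the bit lengths keep growing
print(x.denominator)  # 5**20 after 20 rotations
```

A floating-point version would stay at 64 bits per coordinate no matter how many rotations are applied, at the cost of rounding error.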

On Tue, 16 Jun 2020 at 14:57, Kevig Kevigsp@hotmail.com wrote:

hello,

I am not sure if this has anything to do with the topic but I see you all

look at the programming code for optimizing.

I wonder if there is something in openscad/cgal eating up rendering time.

Rendering the code for my project takes a staggering 5mins 50 secs but the

CPU load stays at 9% and the GPU load stays at 5%.

so the computer is almost doing nothing!

(AMD Ryzen 7 3700X 8core 3.59 and GTX 1650S)

it is as if the computer power is not important at all and there is some

other reason why the calculations are so slow.

I did expect a higher GPU/CPU load when the calculations increase.

rgrds

Henk

--

Sent from: http://forum.openscad.org/

OpenSCAD mailing list

Discuss@lists.openscad.org

http://lists.openscad.org/mailman/listinfo/discuss_lists.openscad.org

On 6/15/2020 11:53 PM, amundsen wrote:

The first version without polyhedron is still quite slow but it might be in

part because I have an integrated GPU only.

I didn't time that one. But excess objects never help.

Preview uses your GPU. Render does not.

The second version is indeed much faster. I need to analyze the code!

It's basically the same as yours, except that where you have a

linear-extruded block with a flat top, it has a polyhedron with a

slanted top. (Actually, it seems that the top isn't flat, that it needs

to be split into two triangles. I'm not good enough at visualizing 3D

geometry to confirm that it has to be that way.) The "bot" values are

your original a,d,f,g coordinates, the bottom of each block; the "top"

values are the same XY coordinates but Z is the top of the block.

Because yours uses flat tops and so must stair-step, you need a lot of

tiny blocks to get a reasonably smooth top surface. Since this scheme

uses slanted tops it can get a smooth top surface with many fewer blocks.

On 6/16/2020 7:12 AM, nop head wrote:

OpenSCAD only uses one core so it won't go above 12.5%.

Nit: the fraction will depend on what kind of processor you have.

I've never understood the relationship between "sockets", "cores" and

"virtual processors", but I have 1/2/4 and at the moment OpenSCAD is

topping out at ~30% for me. I assume that it's not reaching 50% because

of competition from other processes.

On Tue, 16 Jun 2020 15:21:27 +0000

Jordan Brown openscad@jordan.maileater.net wrote:

On 6/16/2020 7:12 AM, nop head wrote:

OpenSCAD only uses one core so it won't go above 12.5%.

Nit: the fraction will depend on what kind of processor you have.

I've never understood the relationship between "sockets", "cores" and

"virtual processors", but I have 1/2/4 and at the moment OpenSCAD is

topping out at ~30% for me. I assume that it's not reaching 50% because

of competition from other processes.

So an x86 processor is a hierarchy.

At the bottom you have hyperthreads which are individual threads of code

execution. They share the same silicon directly. The idea is that a

processor has a bunch of things it can be doing at once and the front end

of the processor is responsible for keeping as many of those units busy

at a time as it can. Having two threads of execution helps to ensure that

if one of them stalls, say waiting for a value not in cache, the other can

fill the gaps, or if one is floating point heavy and the other is integer,

and so on...

Cores are instances of the above. They usually share the larger caches.

Sockets are physically separate things plugged into the motherboard and

don't share caches but also don't share heatsinks and thus some thermal

limits.

(there is also another layer in there for some things which are managed

by groups of processors like power, but that's not important here).

In the AMD case however (and a tiny number of Intel ones) the socket layer

is a bit more confused because for the threadripper/epyc they are

essentially built as multiple sockets but all on one thing you stick on

the motherboard.

The ARM world is similar except that I don't believe any ARM does

hyperthreading (and there are good architectural reasons why what makes

sense in one kind of CPU design does not in others)

ARM (and one upcoming x86) also sometimes has big/little where some of

the processors are big and fast and eat power and some are slow and don't.

"Virtual processors" is a Windows-ism. What it means depends on what

Windows wants it to mean. On a desktop it probably matches hyperthreads

but that depends on what Windows feels like doing, and if you are running

under a hypervisor (which you may or may not magically be depending how

Windows installed and your configuration).

For a current bottom end x86 desktop you'll have 1 socket, 2 cores and

4 hyperthreads. OpenSCAD can use 1 hyperthread of one core, which if the

other thread is idle is typically around 60-70% of the core's total

performance. So your 30% sounds about right.

A perfectly parallelising OpenSCAD would thus be about 3 times faster on

that box than the current one. A realistic number would probably be

nearer twice. In comparison judging by the performance of some other

toolkits I can easily believe that you'd get a thousand fold performance

improvement by using better algorithms. It's just that the availability

of those in open source is limited and writing them is seriously hard

stuff.
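
The arithmetic behind the 30% and "about 3 times" figures above can be sketched as follows. The function name is hypothetical; the 60-70% share and the 2-core box are the numbers quoted above.

```python
def fraction_of_machine(single_thread_share, cores):
    """Fraction of the whole box's throughput used by one busy hyperthread.

    single_thread_share: share of one core's total performance that a
    single hyperthread achieves when its sibling is idle (0.6 to 0.7 above).
    """
    return single_thread_share / cores

for share in (0.6, 0.7):
    used = fraction_of_machine(share, cores=2)
    ideal_speedup = 1 / used  # upper bound for a perfectly parallel program
    print(f"one thread at {share:.0%} of a core: "
          f"{used:.0%} of the box, ideal speed-up {ideal_speedup:.1f}x")
```

For a share of 60% this gives 30% of the box and an ideal speed-up of about 3.3x; for 70% it gives 35% and about 2.9x.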

Alan

Caution: depending on the exact parameters, I'm sometimes getting

UI-WARNING: Object may not be a valid 2-manifold and may need repair!

I assume that what's happening is that the inside edges of the

individual blocks are interacting badly with the cylinder and sometimes

causing the two to share an edge. For geometrical/computational reasons

that IMO don't make much sense in reality, that's a big no-no. But it

often happens when you position things using math.

The best solution is probably to move the inside of the blocks inward

just a little, so that they cleanly overlap with the cylinder no matter

exactly how many sides the cylinder has.

That is, something like

nudge = 1;

abot = [sin(i) * (enclosure_radius-global_thickness-nudge),

cos(i) * (enclosure_radius-global_thickness-nudge), 0];

My math says that for a 60-sided cylinder the radius to the center of an

edge is 99.86% of the radius to the vertices. In theory, a correction

of 0.2% of the radius should be enough to make them overlap. However,

since the cylinder is 20 units thick, we don't need precision -

adjusting it inward 1 unit is fine.
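
The 99.86% figure can be checked numerically. For a regular polygon with n sides, the radius to the middle of an edge is cos(180/n degrees) times the radius to the vertices. In the sketch below the function name and the radius of 100 units are assumptions for illustration only; the thread does not give the actual radius.

```python
import math

def apothem_ratio(sides):
    """Edge-midpoint radius divided by vertex radius for a regular polygon."""
    return math.cos(math.pi / sides)

ratio = apothem_ratio(60)
print(f"{ratio:.2%}")  # 99.86%

# Hypothetical radius, for illustration only.
radius = 100
shortfall = radius * (1 - ratio)  # how far the flat sides sit inside the circle
nudge = 1
print(shortfall < nudge)  # True: a nudge of 1 unit covers the gap at this radius
```

The fewer sides the cylinder has, the larger the shortfall, so a fixed nudge should be rechecked if the side count is reduced a lot.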

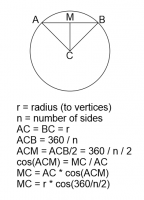

Here's the math, if anybody is interested.

Note: this drawing is a bit poor in that I used 45 degree angles and so

it happens that ACM and CAM are both 45 degrees and AM = CM. In the

general case they won't be equal like that.

Thanks a lot @JordanBrown for the explanation on how to deal with the

"non-manifold" issue. I've never had such a warning in Openscad but it

happened in my slicer and now I understand where it came from!

Good to know the GPU doesn't play a role when rendering too!


On 6/17/2020 12:43 PM, amundsen wrote:

Thanks a lot @JordanBrown for the explanation on how to deal with the

"non-manifold" issue. I've never had such a warning in Openscad but it

happened in my slicer and now I understand where it came from!

The simplest example is two cubes positioned corner to corner:

cube(1);

translate([1,1,0]) cube(1);

but you can encounter it in any number of circumstances. In this

particular case, I believe the vertical edges of the central cylinder

(which is really an extruded polygon) were periodically lining up with

the vertical edges of the inner sides of the blocks that form the sine wave.

That's not really a surprise, because:

- The cylinder probably has a number of sides that is an exact

multiple of one degree, and

- The outer blocks are spaced exact multiples of one degree, and

- The inner edges of the blocks are positioned exactly at the radius

of the cylinder and so exactly at the radius of the cylinder's

vertical edges.
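
To see why the two-cube example trips the 2-manifold check: in a closed 2-manifold mesh, every edge is shared by exactly two faces. The sketch below is a simplified edge count, not what OpenSCAD or CGAL actually runs, and the helper names are hypothetical. It builds the faces of the two cubes from the example and reports any edge used by something other than two faces.

```python
from collections import Counter

def cube_faces(origin, size=1):
    """Return the six quad faces of an axis-aligned cube as tuples of vertices."""
    ox, oy, oz = origin
    faces = []
    for axis in range(3):
        for side in (0, size):
            corners = []
            # Walk around the square with coordinate `axis` fixed at `side`.
            for a, b in ((0, 0), (0, size), (size, size), (size, 0)):
                p = [0, 0, 0]
                p[axis] = side
                p[(axis + 1) % 3] = a
                p[(axis + 2) % 3] = b
                corners.append((ox + p[0], oy + p[1], oz + p[2]))
            faces.append(tuple(corners))
    return faces

def edge_use_counts(faces):
    """Count how many faces use each undirected edge."""
    counts = Counter()
    for face in faces:
        for i in range(len(face)):
            edge = frozenset((face[i], face[(i + 1) % len(face)]))
            counts[edge] += 1
    return counts

# cube(1); translate([1,1,0]) cube(1);
faces = cube_faces((0, 0, 0)) + cube_faces((1, 1, 0))
bad = {edge: n for edge, n in edge_use_counts(faces).items() if n != 2}

for edge, n in bad.items():
    print(sorted(edge), "is used by", n, "faces")
```

The only edge reported is the vertical one from (1, 1, 0) to (1, 1, 1), which is used by four faces. Moving one cube so that the two overlap slightly, or separating them, avoids the shared edge.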